An Interview with Spencer Collins by Dr Jenn Chubb

“Sonic narratives matter but not everyone is experiencing the same thing”: What AI sounds like to a Deaf person.

In a previous post for this blog, I suggested that a failure to take into account the significance of sonic framing when considering visual narratives of AI limited the capacity to fully broaden a public understanding of AI. I now explore the potential which sound and music hold in relation to communicating the realities of AI, and what we can learn from someone who does not hear them but accesses them via different means.

Undeniably, music has power. We use it to make us feel good, to change how we think/feel, to distract us or help us concentrate, to help us run faster or slow down and relax. Music manipulates how we perceive people, concepts, or things. When music is used in storytelling, it is chosen for the resonances and emotional overtones that it brings to the topic, capable of framing a story with fear, sadness or joy (Brown & Volgsten, 2005).

In screen media, this power is used liberally to create the pace and the framing of narratives; one need look no further than the two notes and a rising tempo that have forever impacted society’s perception of sharks. When paired with factual content, like documentaries, music has the power to make the audience take sides on a narrative that is notionally objective. This is especially pertinent for new technologies, where our initial introduction and perception are shaped by the narratives presented in media and we are likely to have little direct lived experience.

The effect of background sound and music for documentaries has been largely overlooked in research (Rogers, 2015). In particular, the effect of sonic framing for storytelling about AI is little explored, and even less so for audiences with disabilities, who may already find themselves disadvantaged by certain emerging technologies (Trewin et al., 2019).

This blog reflects on the sonic framing of Artificial Intelligence (AI) in screen media and documentary. It focuses particularly on the effects of sonic framing for those who may perceive the audio differently to its anticipated reception due to hearing loss, as part of a wider project on the sonic framing of AI in documentary. I will be drawing on a case study interview with a profoundly deaf participant, arguing that sonic narratives do matter in storytelling, but that not everyone is experiencing the same thing.

For those who perceive music either through feeling the vibrations with the TV turned up high, or described in subtitles, lighter motifs are lost and only the driving beats of an ominous AI future are conveyed. This case study also reveals that the assumed audience point of view on AI technology is likely much more negative for disabled audience members; this is not unlike the issues with imagery and AI, which can serve to distract or distort. All this feeds into a wider discussion surrounding what we might refer to as responsible storytelling – fair for people with disabilities.

I had the pleasure of chatting to Spencer Collins, an experienced Equality, Diversity and Inclusion Advisor in the private, public, and NGO sectors. We chatted about AI technologies, imaginaries of how AI might sound, and barriers to engagement, especially with regard to conversational AI. We also discussed the effect his disability has on his experience of storytelling.

“Sonic narratives matter but not everyone is experiencing the same thing.”

Spencer tells me from the start that “AI technologies are not set up for people like me.” He does so as the caption on our Zoom call churns out what most closely resembles nonsense, providing me with an early indication of some of the very real challenges Spencer faces on an everyday basis. Luckily, Spencer can lip-read and I am keen to hear what he has to say.

What becomes clear is that while AI technologies have the potential to massively impact the lives of people living with a disability, they don’t yet work properly for those people. What is also clear is the role that imagery and sound together have in influencing perceptions of technology in storytelling. Importantly, Spencer feels there is a need to involve people with disabilities in the development of technology and in the stories that promote it. He asks, “have companies really spoken to people with disabilities? How safe and useful is AI for people like me?”

Can you introduce yourself?

I’m Spencer, with exceptional skills and experience for more than 18 years in the private, public, and NGO sectors. Many companies are struggling to ensure their Equality and Inclusion policies and procedures really reflect the needs of their businesses and employees, their self-esteem, perceived worth and improving company productivity. So I help with that.

I am profoundly deaf. I come from a hearing family. I am very lucky that my mother has been so great in helping me get to the best speech therapist in the world. But it is tough.

How do you perceive the visual representation of AI in the media?

Good question. Having grown up watching ‘Mr Benn’, the character transformed himself into another character or person in an alternate universe similar to the world of Avatar when he transformed himself to become another person. There was a lot of diversity in the play, and Avatar is supposed to be equally interesting. I am aware that there is native and Asian representation, but I wonder how AI portrays disabled characters?

I often ask myself, “Do I trust everything I see, equally so as when my hands touch something I believe what my eyes see?”

Do you have any comments on our alternative images in the Better Images of AI (BIoAI) database?

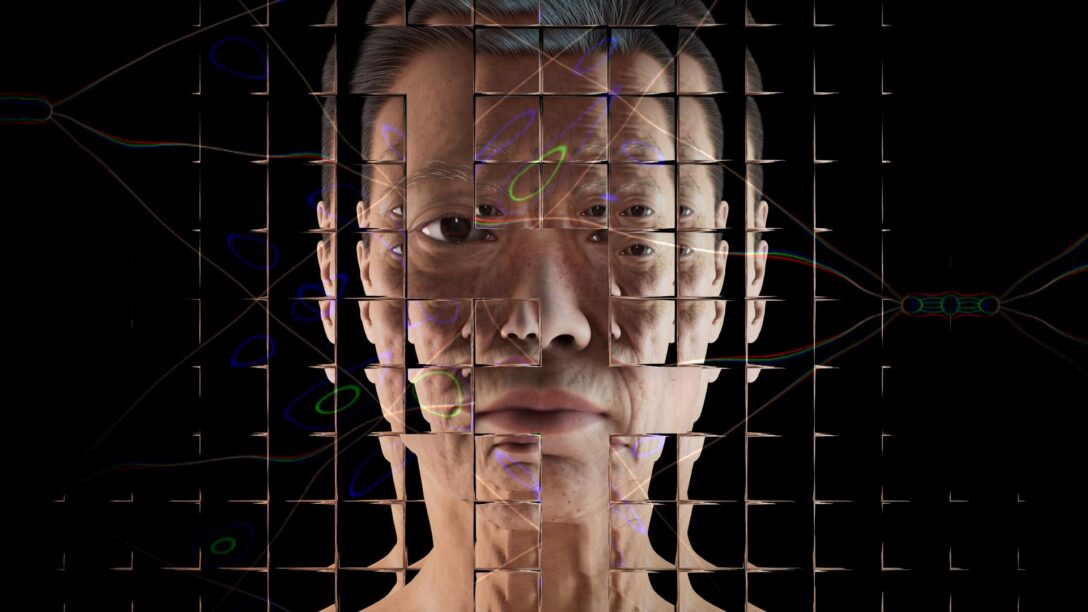

Better Images of AI embraces honesty and authenticity, as well as rigorous facts for the audience. I’m interested to see how artificial intelligence converts the artist’s emotional state, brushwork, and inner state into tangible artworks by translating the work of Picasso, Frank Stella, etc into photographs, the algorithm can recognise the truth of the image. This is the question I need to observe.

There is a lot of interest in images of AI. I feel as though image and sound are intertwined. Do you have an imagination of what AI sounds like?

The hard thing about technology is that there is a gray area – it doesn’t work for everyone. The dancer in ‘Strictly Come Dancing’ relies on vibrations. Deaf people can feel the vibrations, so that’s not coming from a hearing aid.

We have a sixth sense. We are like animals. We can see sound, we can feel something. I have no hearing but superb senses, I can see in the dark, I can lip-read. We taught ourselves how to use our senses. Technology can’t identify this, it will take a long time for technology to be ready.

I’m a musician, so I can relate to this when you describe how you ‘feel’ sound – thinking about storytelling, which uses sound so often to influence audiences, if you were to imagine science fiction, for instance, what kind of sound do you sense from these stories?

When I watch Robocop – I have always wanted to be a Pilot/Traffic Patrol, but I get a lot of rejections because of my disability. You need to have 20/20 hearing. Same with the air force – going back to Robocop, I remember some kind of AI robot, he had a power in him, like Spider-Man. A strength and power. I remember when I was watching at one point it was all about the audio. You could pick up the translation from the telephone. That would be wonderful for the Deaf community.

Interestingly the U.S. has introduced a new development where every sound can be identified for Deaf and Hard of Hearing people, so they can hear everything like any other person. It is something that you could check out.

Sometimes when watching a story, you get a physical reaction, you get a shock. Science fiction might seem very loud to me, which might make the concepts seem more frightening.

How do you interpret sound?

Use of colour and visualizations become really important. I rely on noise, I rely on anything loud, so I can feel the vibrations. Beethoven was profoundly deaf. He cut off the legs of the piano, to hear the difference between high and low note vibrations. Space and distance from technology is important.

Is there an argument for more immersive storytelling and tools?

VR therapy (using AI) is being used and this is a massive moment for people with a range of challenges such as dementia.

Immersive tools are not quite ready though. Think of the ‘metaverse’ – one of the questions is these ‘Minecraft’ type characters. They don’t have any body features, e.g., lips, etc, so this is not ready for people who are hard of hearing. These characters are developed for people like you, not like me. One answer is to use captions, but companies say that that would take away from what was happening around the room – this is not equal.

The Gather-town gaming platform is another example of diverse forms of communication. The most confusing part there is people are talking, but I don’t know, I can’t tell who is talking. These platforms are very black and white. A colour code could be helpful, so we know who is talking.

How do we look into making sound more accessible?

The first step to investigating this kind of AI is learning how to classify music, and then how to feed this information to the machine so that it can be interpreted by Deaf users visually. Determining how the Deaf community currently enjoys music through vibration, and what cues they feel accurately portray the sound, is another question.

Recently I watched a film where a robot was talking and I could not tell what it was saying. I thought – if that was me – I would not have a clue, no visual clue at all. No lips, no movement. It would not do me any good.

Do you use AI in the home?

I have got Alexa – but it doesn’t recognise my speech. If I say something, it completely misunderstands me. I love music, I play all types of music but using AI Apple iTunes, it doesn’t even recognise me. It cannot play music for me. I have to use my hands.

I know some friends have an [Echo] Dot that can talk…to turn the light on etc, but I can’t get it to recognise me. That is a worry when we want someone to do a job on our behalf. We don’t want gimmicks: will the product deliver 100% equally to everyone?

Would it be useful for AI narratives to represent sound visually?

Just imagine you’re watching any music film and the closed captioning is on, but they don’t really give the sound too. Imagine if that’s on the screen too. The company will say ‘no, forget it, we don’t want more captioning over the picture’. How can you incorporate the sound and the text? I don’t have the answer to that. If there was something for sound, that would be great.

The relationship between image and sound seems very important. For instance, documentarians tend to start with the image when they sound design a documentary about AI. In what ways do you feel they link together?

In the same way as Digital Imagery, cinema and games are made up of CGI models that give a realistic look to delight the audience through sound and image. If you go to the cinema and surround sound, oh my god, it’s amazing. You can feel it when it’s so loud. It’s fantastic. It’s an experience. I was watching Click and they created a code plugged into the guitar. Visualizing sound. Maybe that is the way forward.

Is the future of AI positive?

AI can transform the lives of disabled people. Many advances have been made but they offer too much promise to individuals. It’s crucial to bring diverse voices into the design and development of AI.

In what ways, if any, does being deaf impact your relationship with AI?

Take ‘Story Sign’ for example, which is powered by Huawei AI and developed with the European Union for the Deaf and reads children’s books and translates them into sign language. There have been impacts when the US government believes Huawei is a threat to worldwide cybersecurity. How can we tell if artificial intelligence is safe for the Deaf community? These are the questions I am concerned about.

Documentary is one instance where the story is about documenting fact – what kinds of ways might sonic narratives be used to influence the audience? Do you think they matter?

As a former DJ, I encountered a new way of experiencing music through vibrations when played from the ground to above, which challenged my audience to consider hearing vs. feeling.

Are there any metaphors you can imagine about AI and whether there is a link between those we think of when we consider imagery?

Imaginary language uses figurative language to describe objects, actions, and ideas in a way that appeals to the physical senses and helps audiences to envision the scene as if it were real. It is not always accurate to call something an AI image. Visuals and sounds can be described by action, but tastes, physical sensations, and smells can’t.

So what priorities should we have around future tech development?

We need to think about how we can compromise equally, in a fair and transparent way. I personally feel tech would make life a lot easier for people with disabilities, but from the other side, I am frightened.

Talking about care homes and robot carers, I wonder, how will that fit someone like me? It just removes the human entirely. We are too afraid about what will happen next. There is always a fear.

Like anything we are being watched by Big Brother, it’s the same principle for the future of AI, it’s going to be a challenge. It will work for some people, but not all of them. You have fake news saying how wonderful AI is for people with disabilities, but they don’t necessarily ask what those people want.

Are there ways to make AI narratives more inclusive for deaf people?

It’s probably worth reading this report – technology is not an inherent ‘good for disabled people’.

Recommended: How do blind people imagine AI?