“The currently pervasive images of AI make us look somewhere, at the cost of somewhere else.”

In this blog post, Dominik Vrabič Dežman provides a summary of his recent research article, ‘Promising the future, encoding the past: AI hype and public media imagery’.

Dominik sheds light on the importance of the Better Images of AI library, which fosters a more informed, nuanced public understanding of AI by breaking the stronghold of the “deep blue sublime” aesthetic with more diverse and meaningful representations of AI.

Dominik also draws attention to the algorithms which perpetuate the dominance of familiar and sensationalist visuals and calls for movements which reshape media systems to make better images of AI more visible in public discourse.

The full paper is published in the AI and Ethics Journal’s special edition on ‘The Ethical Implications of AI Hype’, a collection edited by We and AI.

AI promises innovation, yet its imagery remains trapped in the past. Deep-blue, sci-fi-inflected visuals have flooded public media, saturating our collective imagination with glowing, retro-futuristic interfaces and humanoid robots. These “deep blue sublime” [1] images, which draw on a steady palette of outdated pop-cultural tropes and clichés, do not merely depict AI — they shape how we think about it, reinforcing grand narratives of intelligence, automation, and inevitability [2]. It takes little to acknowledge that the AI discussed in public media is far from the ethereal, seamless force these visuals disclose. Instead, the term generally refers to a sprawling global technological enterprise, entangled with labor exploitation, ecological extraction, and financial speculation [3–10] — realities conspicuously absent from its dominant public-facing representations.

The widespread rise of these images is set against intensifying “AI hype” [11], which has been compared to historical speculative investment bubbles [12,13]. In my recent research [1,14,15], I join a growing body of work on images of AI [16–21] to explore how these images operate at the intersection of aesthetics and politics. My overarching ambition has been to contribute to the literature an integrated account of the normative and the empirical dimensions of public images of AI. I’ve explored how these images matter politically and ethically, and how that significance is inseparable from the pathways they take in real time, echoing throughout public digital media and wallpapering it in seen-before denominations of blue monochrome.

Rather than measuring the direct impact of AI imagery on public awareness, my focus has been on unpacking the structural forces that produce and sustain these images. What mechanisms dictate their circulation? Whose interests do they serve? How might we imagine alternatives? My critique targets the visual framing of AI in mainstream public media — glowing, abstract, blue-tinted veneers seen daily by millions on search engines, institutional websites, and in reports on AI innovation. These images do not merely aestheticize AI; they foreclose more grounded, critical, and open-ended ways of understanding its presence in the world.

The Intentional Mindlessness of AI Images

Google Images search results for “artificial intelligence”, January 14, 2025. Search conducted from an anonymised instance of Safari in Amsterdam, Netherlands.

Recognizing the ethico-political stakes of AI imagery begins with acknowledging that what we spend our time looking at, or not looking beyond, matters politically and ethically. The currently pervasive images of AI make us look somewhere, at the cost of a somewhere else. The sheer volume of these images, and their dominance in public media, slot public perception into repetitive grooves dominated by human-like robots, glowing blue interfaces, and infinite expanses of deep-blue intergalactic space. By monopolizing the sensory field through which AI is perceived, they reinforce sci-fi clichés, and more importantly, obscure the material realities — human labor, planetary resources, material infrastructures, and economic speculation — that drive AI development [22,23].

In a sense, images of AI could be read as operational [24–27], enlisted in service of an operation which requires them to look, and function, the way they do. This might involve their role in securing future-facing AI narratives, shaping public sentiment towards acceptance of AI innovation, and supporting big tech agendas for AI deployment and adoption. The operational nature of AI imagery means that these images cannot be studied purely as aesthetic artifacts or autonomous works of aesthetic production. Instead, these images are minor actors, moving through technical, cultural and political infrastructures. In doing so, individual images do not say or do much per se – they are always already intertwined in the circuits of their economic uptake, circulation, and currency; not at the hands of the digital labourers who created them, but of the human and algorithmic actors that keep them in circulation.

Simultaneously, the endurance of these images is less the result of intention than of a more mindless inertia. It quickly becomes clear that these images reflect neither public attitudes nor those of their makers: anonymous stock-image producers, digital workers mostly located in the global South [28]. They might reflect the views of the few journalistic or editorial actors who choose the images for their reporting [29], or who are simply looking to increase audience engagement through the use of sensationalist imagery [30]. Ultimately, their visibility is in the hands of algorithms rewarding more of the same familiar visuals over time [1,31], of stock image platforms and search engines, which maintain close ties with media conglomerates [32], which, in turn, have long been entangled with big tech [33]. The stock images are the detritus of a digital economy that rewards repetition over revelation: “poor images” [34] that travel across cyberspace, endlessly cropped, upscaled, recycled, and reused, until they are pulled back into circulation by the very systems they help sustain [15,28].

AI as Ouroboros: Machinic Loops and Recursive Aesthetics

As algorithms increasingly dictate who sees what in the public sphere [35–37], they dictate not only what is seen but also what is repeated. Images of AI become ensnared in algorithmic loops, which sediment the same visuality over time on various news feeds and search engines [15]. This process has intensified with the proliferation of generative AI: as AI-generated content proliferates, it feeds on itself—trained on past outputs, generating ever more of the same. This “closing machinic loop” [15,28] perpetuates aesthetic homogeneity, reinforcing dominant visual norms rather than challenging them. The widespread adoption of AI-generated stock images further narrows the space for disruptive, diverse, and critical representations of AI, making it increasingly difficult for alternative images to surface in public visibility.

ChatGPT 4o output for query: “Produce an image of ‘Artificial Intelligence’”. 14 January 2025.

Straddling the Duality of AI Imagery

In critically examining AI imagery, it is easy to veer into one of two deterministic extremes — both of which risk oversimplifying how these images function in shaping public discourse:

1. Overemphasizing Normative Power:

This approach risks treating AI images as if they have autonomous agency, ignoring the broader systems that shape their circulation. AI images appear as sublime artifacts—self-contained objects for contemplation, removed from their daily life as fleeting passengers in the digital media image economy. While the production of images certainly exerts influence in shaping socio-technical imaginaries [38,39], they operate within media platforms, economic structures, and algorithmic systems that constrain their impact.

2. Overemphasizing Materiality:

This perspective reduces AI to mere infrastructure, seeing images as passive reflections of technological and industrial processes, rather than active participants in shaping public perception. From this view, AI’s images are dismissed as epiphenomenal, secondary to the “real” mechanisms of AI’s production: cloud computing, data centers, supply chains, and extractive labor. In reality, AI has never been purely empirical; cultural production has been integral to AI research and development from the outset, with speculative visions long driving policy, funding, and public sentiment [40].

Images of AI are neither neutral nor inert. The current diminishing potency of glowing, sci-fi-inflected AI imagery as a stand-in for AI in public media suggests a growing fatigue with their clichés, and cannot be untangled from a general discomfort with AI’s utopian framing, as media discourse pivots toward concerns over opacity, power asymmetries, and scandals in its implementation [29,41]. A robust critique of the cultural entanglements of AI requires addressing both its normative commitments (promises made to the public), and its empirical components (data, resources, labour; [6]).

Toward Better Images: Literal Media & Media Literacy

Given the embeddedness of AI images within broader machinations of power, the ethics of AI images are deeply tied to public understanding and awareness of such processes. Cultivating a more informed, critical public — through exposure to diverse and meaningful representations of AI — is essential to breaking the stronghold of the deep blue sublime.

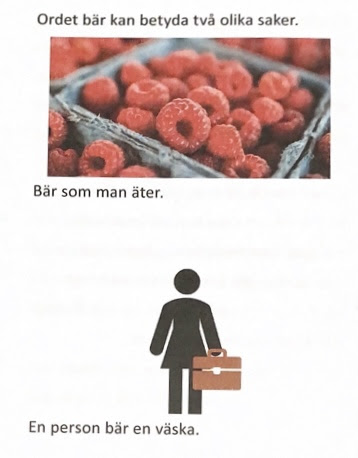

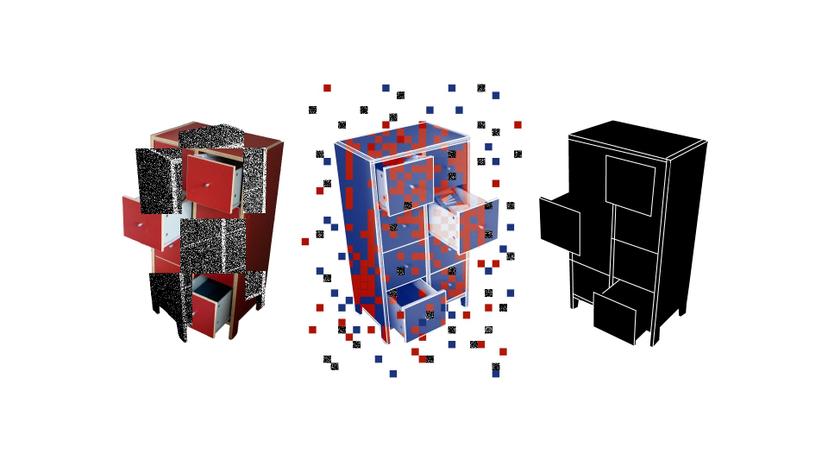

At the individual level, media literacy equips the public to critically engage with AI imagery [1,42,43]. By learning to question the visual veneers, people can move beyond passive consumption of the pervasive, reductive tropes that dominate AI discourse. Better images recalibrate public perception, offering clearer insights into what AI is, how it functions, and its societal impact. The kinds of images produced are equally important. Better images would highlight named infrastructural actors, document AI research and development, and/or diversify the visual associations available to us, loosening the visual stronghold of the currently dominant tropes.

This greatly raises the bar for news outlets in producing original imagery of didactic value, which is where open-source repositories such as Better Images of AI serve as invaluable resources. This crucially bleeds into the urgency for reshaping media systems, making better images readily available to creators and media outlets, helping them move away from generic visuals toward educational, thought-provoking imagery. However, creating better visuals is not enough; they must become embedded into media infrastructure to become the norm rather than the exception.

Given this, the role of algorithms cannot be ignored. As noted earlier, algorithms drive what images are seen, shared, and prioritized in public discourse. Without addressing these mechanisms, even the most promising alternatives risk being drowned out by the familiar clichés. Rethinking these pathways is essential to ensure that improved representations can disrupt the existing visual narrative of AI.

Efforts to create better AI imagery are only as effective as their ability to reach the public eye and disrupt the dominance of the “deep blue sublime” aesthetic in public media. This requires systemic action—not merely producing different images in isolation, but rethinking the networks and mechanisms through which these images are circulated. To make a meaningful impact, we must address both the sources of production and the pathways of dissemination. By expanding the ways we show, think about, and engage with AI, we create opportunities for political and cultural shifts. A change in one way of sensing AI (writing / showing / thinking / speaking) invariably loosens gaps for a change in others.

Seeing AI ≠ Believing AI

AI is not just a technical system; it is a speculative, investment-driven project, a contest over public consensus, staged by a select few to cement its inevitability [44]. The outcome is a visual regime that detaches AI’s media portrayal from its material reality: a territorial, inequitable, resource-intensive, and financially speculative global enterprise.

Images of AI come from somewhere (they are products of poorly-paid digital labour, served through algorithmically-ranked feeds), do something (torque what is at-hand for us to imagine with, directing attention away from AI’s pernicious impacts and its growing inequalities), and go somewhere (repeat themselves ad nauseam through tightening machinic loops, numbing rather than informing; [16]).

The images have left few fooled, and represent a missed opportunity for adding to public sensitisation and understanding regarding AI. Crucially, bad images do not inherently disclose bad tech, nor do good images promote good tech; the widespread adoption of better images of AI in public media would not automatically lead to socially good or desirable understandings, engagements, or developments of AI. That remains the issue of the current political economy of AI, whose stakeholders only partially determine this image economy. Better images alone cannot solve this, but they might open slivers of insight into AI’s global “arms race.”

As it stands, different visual regimes struggle to be born. Fostering media literacy, demanding critical representations, and disrupting the algorithmic stranglehold on AI imagery are acts of resistance. If AI is here to stay, then so too must be our insistence on seeing it otherwise — beyond the sublime spectacle, beyond inevitability, toward a more porous and open future.

About the author

Dominik Vrabič Dežman (he/him) is an information designer and media philosopher. He is currently at the Departments of Media Studies and Philosophy at the University of Amsterdam. Dominik’s research interests include public narratives and imaginaries of AI, politics and ethics of UX/UI, media studies, visual communication and digital product design.

References

1. Vrabič Dežman, D.: Defining the Deep Blue Sublime [Internet]. SETUP; (2023). https://web.archive.org/web/20230520222936/https://deepbluesublime.tech/

2. Burrell, J.: Artificial Intelligence and the Ever-Receding Horizon of the Future [Internet]. Tech Policy Press. (2023 Jun 6). https://techpolicy.press/artificial-intelligence-and-the-ever-receding-horizon-of-the-future/

3. Kponyo, J.J., Fosu, D.M., Owusu, F.E.B., Ali, M.I., Ahiamadzor, M.M.: Techno-neocolonialism: an emerging risk in the artificial intelligence revolution. TraHs [Internet]. (2024) [cited 2025 Feb 18]. https://doi.org/10.25965/trahs.6382

4. Leslie, D., Perini, A.M.: Future Shock: Generative AI and the International AI Policy and Governance Crisis. Harvard Data Science Review [Internet]. (2024) [cited 2025 Feb 18]. https://doi.org/10.1162/99608f92.88b4cc98

5. Regilme, S.S.F.: Artificial Intelligence Colonialism: Environmental Damage, Labor Exploitation, and Human Rights Crises in the Global South. SAIS Review of International Affairs. 44:75–92. (2024). https://doi.org/10.1353/sais.2024.a950958

6. Crawford, K.: The Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence [Internet]. (2021). https://www.degruyter.com/isbn/9780300252392

7. Sloane, M.: Controversies, contradiction, and “participation” in AI. Big Data & Society. 11:20539517241235862. (2024). https://doi.org/10.1177/20539517241235862

8. Rehak, R.: On the (im)possibility of sustainable artificial intelligence. Internet Policy Review [Internet]. (2024 Sep 30). https://policyreview.info/articles/news/impossibility-sustainable-artificial-intelligence/1804

9. Wierman, A., Ren, S.: The Uneven Distribution of AI’s Environmental Impacts. Harvard Business Review [Internet]. (2024 Jul 15). https://hbr.org/2024/07/the-uneven-distribution-of-ais-environmental-impacts

10. What we don’t talk about when we talk about AI | Joseph Rowntree Foundation [Internet]. (2024 Feb 8). https://www.jrf.org.uk/ai-for-public-good/what-we-dont-talk-about-when-we-talk-about-ai

11. Duarte, T., Barrow, N., Bakayeva, M., Smith, P.: Editorial: The ethical implications of AI hype. AI Ethics. 4:649–51. (2024). https://doi.org/10.1007/s43681-024-00539-x

12. Singh, A.: The AI Bubble [Internet]. Social Science Encyclopedia. (2024 May 28). https://www.socialscience.international/the-ai-bubble

13. Floridi, L.: Why the AI Hype is Another Tech Bubble. Philos Technol. 37:128. (2024). https://doi.org/10.1007/s13347-024-00817-w

14. Vrabič Dežman, D.: Interrogating the Deep Blue Sublime: Images of Artificial Intelligence in Public Media. In: Cetinic E, Del Negueruela Castillo D, editors. From Hype to Reality: Artificial Intelligence in the Study of Art and Culture. Rome/Munich: HumanitiesConnect; (2024). https://doi.org/10.48431/hsah.0307

15. Vrabič Dežman, D.: Promising the future, encoding the past: AI hype and public media imagery. AI Ethics [Internet]. (2024) [cited 2024 May 7]. https://doi.org/10.1007/s43681-024-00474-x

16. Romele, A.: Images of Artificial Intelligence: a Blind Spot in AI Ethics. Philos Technol. 35:4. (2022). https://doi.org/10.1007/s13347-022-00498-3

17. Singler, B.: The AI Creation Meme: A Case Study of the New Visibility of Religion in Artificial Intelligence Discourse. Religions. 11:253. (2020). https://doi.org/10.3390/rel11050253

18. Steenson, M.W.: A.I. Needs New Clichés [Internet]. Medium. (2018 Jun 13). https://web.archive.org/web/20230602121744/https://medium.com/s/story/ai-needs-new-clich%C3%A9s-ed0d6adb8cbb

19. Hermann, I.: Beware of fictional AI narratives. Nat Mach Intell. 2:654–654. (2020). https://doi.org/10.1038/s42256-020-00256-0

20. Cave, S., Dihal, K.: The Whiteness of AI. Philos Technol. 33:685–703. (2020). https://doi.org/10.1007/s13347-020-00415-6

21. Mhlambi, S.: God in the image of white men: Creation myths, power asymmetries and AI [Internet]. Sabelo Mhlambi. (2019 Mar 29). https://web.archive.org/web/20211026024022/https://sabelo.mhlambi.com/2019/03/29/God-in-the-image-of-white-men

22. How to invest in AI’s next phase | J.P. Morgan Private Bank U.S. [Internet]. Accessed 2025 Feb 18. https://privatebank.jpmorgan.com/nam/en/insights/markets-and-investing/ideas-and-insights/how-to-invest-in-ais-next-phase

23. Jensen, G., Moriarty, J.: Are We on the Brink of an AI Investment Arms Race? [Internet]. Bridgewater. (2024 May 30). https://www.bridgewater.com/research-and-insights/are-we-on-the-brink-of-an-ai-investment-arms-race

24. Paglen, T.: Operational Images. e-flux journal. 59:3. (2014).

25. Pantenburg, V.: Working images: Harun Farocki and the operational image. Image Operations. Manchester University Press; p. 49–62. (2016).

26. Parikka, J.: Operational Images: Between Light and Data [Internet]. (2023 Feb). https://web.archive.org/web/20230530050701/https://www.e-flux.com/journal/133/515812/operational-images-between-light-and-data/

27. Celis Bueno, C.: Harun Farocki’s Asignifying Images. tripleC. 15:740–54. (2017). https://doi.org/10.31269/triplec.v15i2.874

28. Romele, A., Severo, M.: Microstock images of artificial intelligence: How AI creates its own conditions of possibility. Convergence: The International Journal of Research into New Media Technologies. 29:1226–42. (2023). https://doi.org/10.1177/13548565231199982

29. Moran, R.E., Shaikh, S.J.: Robots in the News and Newsrooms: Unpacking Meta-Journalistic Discourse on the Use of Artificial Intelligence in Journalism. Digital Journalism. 10:1756–74. (2022). https://doi.org/10.1080/21670811.2022.2085129

30. De Dios Santos, J.: On the sensationalism of artificial intelligence news [Internet]. KDnuggets. (2019). https://www.kdnuggets.com/on-the-sensationalism-of-artificial-intelligence-news.html/

31. Rogers, R.: Aestheticizing Google critique: A 20-year retrospective. Big Data & Society. 5:205395171876862. (2018). https://doi.org/10.1177/2053951718768626

32. Kelly, J.: When news orgs turn to stock imagery: An ethics Q & A with Mark E. Johnson [Internet]. Center for Journalism Ethics. (2019 Apr 9). https://ethics.journalism.wisc.edu/2019/04/09/when-news-orgs-turn-to-stock-imagery-an-ethics-q-a-with-mark-e-johnson/

33. Papaevangelou, C.: Funding Intermediaries: Google and Facebook’s Strategy to Capture Journalism. Digital Journalism. 0:1–22. (2023). https://doi.org/10.1080/21670811.2022.2155206

34. Steyerl, H.: In Defense of the Poor Image. e-flux journal [Internet]. (2009) [cited 2025 Feb 18]. https://www.e-flux.com/journal/10/61362/in-defense-of-the-poor-image/

35. Bucher, T.: Want to be on the top? Algorithmic power and the threat of invisibility on Facebook. New Media & Society. 14:1164–80. (2012). https://doi.org/10.1177/1461444812440159

36. Bucher, T.: If…Then: Algorithmic Power and Politics. Oxford University Press; (2018).

37. Gillespie, T.: Custodians of the internet: platforms, content moderation, and the hidden decisions that shape social media. New Haven: Yale University Press; (2018).

38. Jasanoff, S., Kim, S.-H., editors.: Dreamscapes of Modernity: Sociotechnical Imaginaries and the Fabrication of Power [Internet]. Chicago, IL: University of Chicago Press; Accessed 2022 Jun 26. https://press.uchicago.edu/ucp/books/book/chicago/D/bo20836025.html

39. O’Neill, J.: Social Imaginaries: An Overview. In: Peters MA, editor. Encyclopedia of Educational Philosophy and Theory [Internet]. Singapore: Springer Singapore; p. 1–6. (2016). https://doi.org/10.1007/978-981-287-532-7_379-1

40. Law, H.: Computer vision: AI imaginaries and the Massachusetts Institute of Technology. AI Ethics [Internet]. (2023) [cited 2024 Feb 25]. https://doi.org/10.1007/s43681-023-00389-z

41. Nguyen, D., Hekman, E.: The news framing of artificial intelligence: a critical exploration of how media discourses make sense of automation. AI & Soc. 39:437–51. (2024). https://doi.org/10.1007/s00146-022-01511-1

42. Woo, L.J., Henriksen, D., Mishra, P.: Literacy as a Technology: a Conversation with Kyle Jensen about AI, Writing and More. TechTrends. 67:767–73. (2023). https://doi.org/10.1007/s11528-023-00888-0

43. Kvåle, G.: Critical literacy and digital stock images. Nordic Journal of Digital Literacy. 18:173–85. (2023). https://doi.org/10.18261/njdl.18.3.4

44. Tacheva, Z., Appedu, S., Wright, M.: AI AS “UNSTOPPABLE” AND OTHER INEVITABILITY NARRATIVES IN TECH: ON THE ENTANGLEMENT OF INDUSTRY, IDEOLOGY, AND OUR COLLECTIVE FUTURES. AoIR Selected Papers of Internet Research [Internet]. (2024) [cited 2025 Feb 18]. https://doi.org/20250206083707000